Technical SEO Best Practices

Achieving Optimal Website Performance

In today’s digital landscape, where online presence is crucial for businesses, search engine optimization (SEO) plays a pivotal role in driving organic traffic and enhancing visibility. This guide focuses on technical SEO, the work on a site’s infrastructure that helps search engines crawl, render, and index it, and outlines best practices that can help your website outperform competitors in search results.

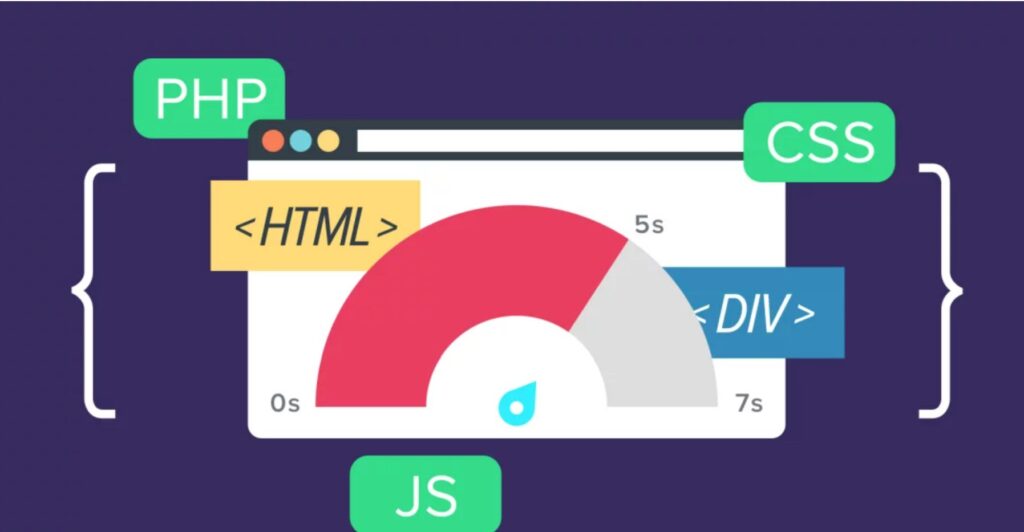

1. Website Speed Optimization

A crucial aspect of technical SEO is optimizing your website’s speed. Site speed directly influences user experience, bounce rates, and ultimately, search rankings. To ensure optimal website performance, consider the following steps:

Minimize HTTP Requests

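Each file a page references (stylesheet, script, image, font) usually costs a separate HTTP request, so reducing the number of requests can significantly speed up loading. Combining CSS and JavaScript files, optimizing images, and leveraging browser caching so that returning visitors reuse files they have already downloaded are effective ways to minimize requests.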
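
As a minimal sketch of the "combine files" step, the Python function below concatenates several stylesheets into one bundle, so a page that referenced three CSS files needs only one request. The function name `bundle_css` and the file names are hypothetical; in practice a build tool usually does this, often with minification as well.

```python
def bundle_css(sources: dict[str, str]) -> str:
    """Concatenate CSS sources (file name -> contents) into one stylesheet.

    Files are joined in the order given, because later CSS rules can
    override earlier ones; dicts keep insertion order in Python 3.7+.
    """
    parts = []
    for name, css in sources.items():
        # A marker comment shows where each original file starts in the bundle.
        parts.append(f"/* --- {name} --- */\n{css.strip()}")
    return "\n\n".join(parts) + "\n"


# Hypothetical stylesheets: three requests become one.
bundle = bundle_css({
    "reset.css": "body { margin: 0; }",
    "layout.css": ".main { display: flex; }",
    "theme.css": "a { color: #0055aa; }",
})
print(bundle)
```

Write the returned string to a single file (for example, a hypothetical `bundle.css`) and reference only that file from your pages, keeping the original order so that rule precedence does not change.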

Implement Content Delivery Networks (CDNs)

CDNs cache your website’s static content (images, stylesheets, scripts) on servers in many locations worldwide and serve each visitor from a nearby one, which reduces latency. This can noticeably shorten page load times, particularly for visitors far from your origin server.
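
One common setup serves static files from a separate CDN hostname while pages stay on your own domain. As a rough sketch, assuming a hypothetical CDN host `cdn.example.com` that pulls files from your origin, the helper below rewrites static asset paths to CDN URLs and leaves page URLs untouched. The extension list is an assumption to adjust per site, and many CDNs can instead sit in front of the whole domain, in which case no rewriting is needed.

```python
from pathlib import PurePosixPath
from urllib.parse import urljoin, urlsplit

# Hypothetical CDN hostname; use the one your CDN provider assigns.
CDN_BASE = "https://cdn.example.com/"

# Static file types to serve from the CDN (an assumption; adjust per site).
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}


def to_cdn_url(asset_path: str, cdn_base: str = CDN_BASE) -> str:
    """Rewrite a site-relative static asset path so it is fetched from the CDN.

    Other paths (HTML pages, API routes) are returned unchanged and keep
    being served by the origin server.
    """
    suffix = PurePosixPath(urlsplit(asset_path).path).suffix.lower()
    if suffix not in STATIC_EXTENSIONS:
        return asset_path
    # Strip the leading "/" so urljoin appends to the CDN base instead of replacing its path.
    return urljoin(cdn_base, asset_path.lstrip("/"))


for path in ["/css/site.css", "/img/logo.png?v=2", "/about"]:
    print(path, "->", to_cdn_url(path))
```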

Enable Gzip Compression

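Enabling Gzip compression reduces file sizes during transmission, resulting in faster loading times. Text-based files such as HTML, CSS, and JavaScript compress especially well because they contain many repeated tags, keywords, and attribute names, so compressing them is one of the simplest ways to improve website performance.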
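
In production the web server or CDN compresses responses on the fly and labels them with a `Content-Encoding: gzip` header when the browser advertises support through `Accept-Encoding`. To illustrate why text files benefit, the Python sketch below uses the standard `gzip` module to compress a made-up, repetitive HTML fragment and report the size before and after. It only measures compression; it does not configure a server, and the compression level of 6 is an arbitrary middle setting.

```python
import gzip


def gzip_savings(text: str, level: int = 6) -> tuple[int, int]:
    """Return (original size, gzip-compressed size) in bytes for a text asset.

    Levels run from 1 (fastest) to 9 (smallest output).
    """
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw, compresslevel=level)
    # gzip is lossless: decompressing must give back the exact original bytes.
    assert gzip.decompress(compressed) == raw
    return len(raw), len(compressed)


# Made-up markup: repeated tags and attributes are what make HTML compress well.
sample_html = "<ul>\n" + "".join(
    f'  <li class="product-item"><a href="/products/{i}">Product {i}</a></li>\n'
    for i in range(200)
) + "</ul>\n"

before, after = gzip_savings(sample_html)
print(f"{before} bytes -> {after} bytes ({100 * (1 - after / before):.0f}% smaller)")
```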

2. Mobile-Friendly Design

With the proliferation of smartphones and tablets, optimizing your website for mobile devices is imperative. Google uses mobile-first indexing, meaning it predominantly uses the mobile version of a page’s content for indexing and ranking, which makes mobile optimization a critical factor for outranking competitors.

Responsive Web Design

Implementing a responsive web design ensures that your website adapts seamlessly to different screen sizes and resolutions. This approach offers a consistent user experience across devices and satisfies Google’s mobile-friendliness criteria.

Accelerated Mobile Pages (AMP)

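Consider implementing Accelerated Mobile Pages (AMP) to create lightweight, fast-loading versions of your web pages. Note that AMP is not itself a ranking factor, and Google no longer requires AMP for its Top Stories carousel; any search benefit comes from the faster loading and better user experience, which a well-optimized non-AMP page can also deliver.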

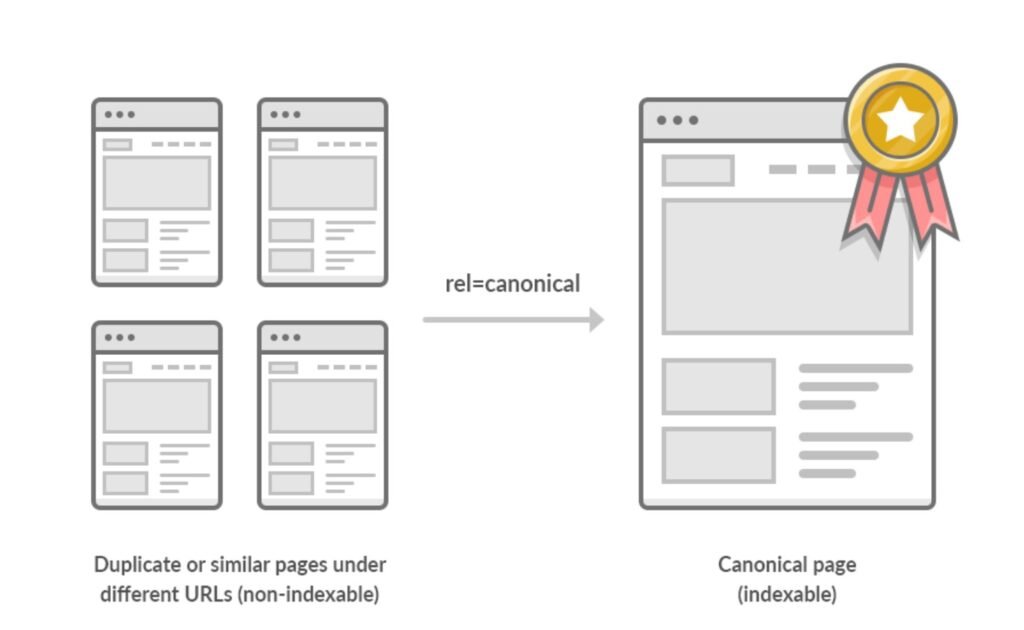

3. URL Structure and Canonicalization

Optimizing your website’s URL structure and canonicalization helps search engines understand your content hierarchy and avoid duplicate content issues.

Keyword-Rich URLs

Craft descriptive URLs that incorporate relevant keywords, giving search engines and users an immediate understanding of the page’s content. Keep URLs short, and separate words with hyphens rather than underscores, as Google recommends, for better readability.
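
A minimal sketch of turning a page title into a short, hyphen-separated URL slug is shown below. The function name `slugify` and the eight-word cap are hypothetical choices for illustration; many CMS platforms generate slugs this way automatically.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 8) -> str:
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Fold accented characters to ASCII (e.g. "é" -> "e") and drop anything else non-ASCII.
    ascii_text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Lowercase, then keep only runs of letters and digits as words.
    words = re.findall(r"[a-z0-9]+", ascii_text.lower())
    # Cap the number of words so the URL stays short and readable.
    return "-".join(words[:max_words])


print(slugify("Technical SEO Best Practices!"))  # technical-seo-best-practices
```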

Canonical Tags

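A canonical tag, a `<link rel="canonical">` element in the page’s `<head>`, tells search engines which URL is the preferred version when the same or very similar content is reachable at several URLs (for example, with tracking parameters or with and without "www"). Search engines treat it as a strong hint rather than a strict directive, so implementing canonical tags consistently helps consolidate ranking signals onto one URL instead of splitting them across duplicates.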
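
As a sketch of how a site might generate its canonical tags, the Python helpers below normalize a URL and wrap it in the `<link>` element. The normalization rules are assumptions for illustration: the site is assumed to be HTTPS-only, and the `IGNORED_PARAMS` set of parameters that create duplicates is hypothetical and should reflect your own site.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical query parameters that create duplicate URLs without
# changing the page content (tracking, sorting, sessions); adjust per site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "sessionid"}


def canonical_url(url: str) -> str:
    """Normalize a URL to the single version you want search engines to index.

    Assumes the site is served over HTTPS: forces the scheme, lowercases the
    host, drops ignored query parameters and any #fragment.
    """
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element to place in the page's <head>."""
    # escape() turns "&" into "&amp;", as required inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_link_tag("http://WWW.Example.com/shoes?utm_source=news&color=red#reviews"))
# prints <link rel="canonical" href="https://www.example.com/shoes?color=red">
```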

4. Schema Markup and Structured Data

Schema markup and structured data provide search engines with additional context about your website’s content, which can make your pages eligible for rich snippets in search results.

Implementing Schema Markup

Use schema markup to give search engines structured data that describes your content. This can make your pages eligible for rich snippets such as star ratings, reviews, and other details alongside your search results; search engines still decide whether to show them, so markup alone does not guarantee a rich result.
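
Google recommends JSON-LD, a `<script type="application/ld+json">` block, as the format for structured data. The Python sketch below builds such a block for a schema.org `Product` with an `AggregateRating`, the type behind star-rating snippets. The product name and rating values are hypothetical, and each rich result type has its own required properties in the search engine’s documentation, so check those before relying on this exact shape.

```python
import json


def product_jsonld(name: str, rating: float, review_count: int) -> str:
    """Return a <script> element holding schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        # AggregateRating is the schema.org type behind star-rating snippets.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # Escape "</" as "<\/" (valid JSON) so a value containing "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical product and rating values.
print(product_jsonld("Example Trail Shoe", 4.6, 128))
```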

Structured Data Testing

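Before deploying structured data on your website, validate it. Google has retired its Structured Data Testing Tool; use Google’s Rich Results Test to check eligibility for Google’s rich results, and the Schema Markup Validator (validator.schema.org) for general schema.org validation. Both tools flag errors or warnings that may affect how search engines interpret your structured data.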
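
As a quick local pre-check before running an online validator, the Python sketch below extracts each JSON-LD block from a page’s HTML, confirms it parses as JSON, and checks that every object declares `@context` and `@type`. The function name `check_jsonld` is hypothetical, and the check is deliberately basic: it uses a regular expression rather than a full HTML parser, does not understand `@graph` wrappers, and knows nothing about which properties a rich result requires.

```python
import json
import re

# Captures the body of every <script type="application/ld+json"> element.
JSONLD_RE = re.compile(
    r'<script[^>]*type=["\']application/ld\+json["\'][^>]*>(.*?)</script>',
    re.IGNORECASE | re.DOTALL,
)


def check_jsonld(html: str) -> list[str]:
    """Return basic problems found in a page's JSON-LD blocks (empty list = none found)."""
    blocks = JSONLD_RE.findall(html)
    if not blocks:
        return ["no JSON-LD block found"]
    problems = []
    for i, block in enumerate(blocks, start=1):
        try:
            data = json.loads(block)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        # A block may hold a single object or a list of objects.
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict):
                problems.append(f"block {i}: expected a JSON object")
                continue
            for key in ("@context", "@type"):
                if key not in item:
                    problems.append(f"block {i}: missing {key}")
    return problems


# A trailing comma is a common hand-editing mistake that makes JSON invalid.
page = '<script type="application/ld+json">{"@type": "Article",}</script>'
print(check_jsonld(page))
```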

5. XML Sitemaps and Robots.txt Optimization

XML sitemaps and robots.txt files are vital components of technical SEO that assist search engines in understanding your website’s structure and crawling it efficiently.

XML Sitemaps

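Create XML sitemaps and submit them to search engines (for example, through Google Search Console or a `Sitemap:` line in robots.txt) to help them discover and index your website’s pages. Ensure that your sitemap lists the canonical URLs of all essential pages and follows the sitemaps.org protocol, which limits each sitemap file to 50,000 URLs and 50 MB uncompressed.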
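
A minimal sketch of generating a sitemap in the sitemaps.org format with Python’s standard `xml.etree.ElementTree` is shown below. The page URLs and dates are hypothetical; a real site would pull them from its CMS or database and would split very large sites across several files listed in a sitemap index.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(entries: list[tuple[str, str]]) -> bytes:
    """Build a sitemap from (absolute URL, last-modified date) pairs."""
    if len(entries) > MAX_URLS:
        raise ValueError("too many URLs: split them across files and use a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" automatically.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C Datetime, e.g. 2024-01-15
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages and dates.
print(build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/technical-seo", "2024-02-01"),
]).decode("utf-8"))
```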

Robots.txt Optimization

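The robots.txt file at the root of your site tells search engine crawlers which paths they may crawl, letting you keep them away from low-value areas such as cart or internal search pages. Note that it controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it. To keep a page out of the index, use a noindex robots meta tag or X-Robots-Tag header and leave the page crawlable so search engines can see that directive. Because robots.txt is publicly readable and honored only by well-behaved crawlers, never rely on it to protect sensitive content; use authentication instead.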
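
Python’s standard `urllib.robotparser` module offers a quick way to check how a set of robots.txt rules applies to specific URLs before deploying them. The rules and URLs below are hypothetical. One caveat: Python’s parser applies the first matching rule in file order, while Google uses the most specific (longest) matching rule, so the broad `Allow: /` is placed last to make both interpretations agree.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of cart and internal search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/products/shoes", "/cart/checkout", "/search?q=boots"]:
    allowed = parser.can_fetch("Googlebot", "https://www.example.com" + path)
    print(f"{path:20} {'crawl' if allowed else 'blocked'}")

# Sitemap lines are exposed separately (Python 3.8+).
print(parser.site_maps())
```

Remember that a "blocked" result here means only that compliant crawlers will not fetch the URL; it does not remove the URL from search results.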

Southern sages

SEO Expert